Artificial intelligence continues to accelerate, but few recent releases have captured as much attention as Baidu’s ERNIE-4.5-21B-A3B-Thinking. This compact Mixture of Experts (MoE) model is not just another large-scale system: it is built specifically for deep reasoning, efficiency, and scalable performance. Designed to balance raw computing power with intelligent routing of each input to specialised experts, it reflects a shift in how AI systems process information and make decisions.

What Makes ERNIE-4.5-21B-A3B-Thinking Different?

The ERNIE family has long been Baidu’s flagship project in the race to advance AI. The 4.5-21B-A3B-Thinking version takes this a step further with a model that is smaller in scale yet stronger at reasoning.

Unlike most large models that brute-force their way through massive parameter activations, ERNIE relies on a Mixture of Experts design. This means that for each input, only the most relevant parts of the model are activated, keeping the system lightweight while still producing highly accurate results.
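To make the routing idea concrete, here is a minimal sketch of top-k expert selection for a single token. It is not Baidu's implementation: the toy scalar "experts", the router scores, and the function names (`route_top_k`, `moe_layer`) are all hypothetical, chosen only to show how a router can activate a few experts and ignore the rest.

```python
import math

def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalise their weights.

    Returns a list of (expert_index, weight) pairs, highest weight first.
    """
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]

def moe_layer(x, experts, router_scores, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Hypothetical toy experts: each one is just a scalar function.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
router_scores = [0.1, 2.0, -1.0, 1.0]  # made-up scores for one token

selected = route_top_k(router_scores, k=2)   # experts 1 and 3 are chosen
output = moe_layer(3.0, experts, router_scores, k=2)
print(selected, output)
```

Experts 0 and 2 are never called for this token, which is where the compute saving comes from. In a real MoE layer the experts are feed-forward networks and the router scores come from a learned linear layer.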

At its heart, this approach allows the model to think step by step, draw logical conclusions, and integrate knowledge in ways that many traditional large language models struggle to achieve.

Core Features of ERNIE-4.5-21B-A3B-Thinking

1. Smarter Use of Parameters:

The model contains 21 billion parameters in total, but it doesn’t fire all of them at once. As the “A3B” in its name indicates, only about 3 billion are activated for each token. Dynamic expert routing selects the most relevant experts for each token rather than running the whole network, which improves speed and efficiency without sacrificing performance.
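As a quick back-of-the-envelope check, the share of parameters used per token can be computed directly. The 3 billion figure is an assumption read from the "A3B" part of the model name (roughly 3 billion activated parameters per token); the constant names are illustrative only.

```python
TOTAL_PARAMS = 21e9   # total parameters, from the model name
ACTIVE_PARAMS = 3e9   # assumption: "A3B" = about 3B activated per token

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"Active per token: {active_fraction:.1%}")  # prints "Active per token: 14.3%"
```

In other words, roughly one parameter in seven takes part in processing any given token.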

2. Built-In Reasoning Capabilities:

The “Thinking” component is more than branding—it’s a genuine improvement. The model is trained to handle multi-step reasoning, logical deduction, and complex problem-solving instead of producing surface-level answers.

3. Knowledge Integration:

As part of the ERNIE lineage, this model continues to excel at blending external knowledge bases with its own learning. That means answers are not just fluent but also factually grounded and context-aware.

4. Compact but Scalable:

Because of its MoE design, the model is energy-efficient, reducing both hardware costs and power consumption. For enterprises and researchers, this makes deployment far more accessible.
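A rough estimate shows where the savings do and do not come from. The sketch below assumes 16-bit weights (2 bytes per parameter), about 3 billion active parameters per token, and the common rule of thumb of roughly 2 floating-point operations per active parameter per token in a forward pass. These are approximations for illustration, not measured figures for this model.

```python
TOTAL_PARAMS = 21e9      # all experts must still be held in memory
ACTIVE_PARAMS = 3e9      # assumption: about 3B parameters used per token
BYTES_PER_PARAM = 2      # assumption: 16-bit (bf16/fp16) weights

# Memory is driven by the total parameter count.
weight_memory_gb = TOTAL_PARAMS * BYTES_PER_PARAM / 1e9

# Compute per token is driven by the active parameter count
# (rule of thumb: ~2 FLOPs per parameter per token, forward pass).
moe_flops_per_token = 2 * ACTIVE_PARAMS
dense_flops_per_token = 2 * TOTAL_PARAMS
compute_ratio = dense_flops_per_token / moe_flops_per_token

print(f"Weights: ~{weight_memory_gb:.0f} GB")
print(f"Compute vs. a dense 21B model: ~{compute_ratio:.0f}x less per token")
```

Under these assumptions the weights still occupy about 42 GB, so the MoE design mainly cuts compute and energy per token (about 7x here) rather than the memory needed to host the model.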

Why ERNIE-4.5-21B-A3B-Thinking Matters

Many AI systems today excel at generating text but falter when asked to reason deeply or solve multi-layered problems. This is where Baidu’s model shines. By focusing on reasoning pathways and structured knowledge use, ERNIE offers several clear advantages:

- Sharper logical accuracy in mathematics, science, and policy-related tasks.

- Lower operating costs thanks to selective expert activation.

- Better factual grounding, reducing the hallucinations that plague other large models.

- Broader applications in sectors like finance, healthcare, and law, where logical clarity is essential.

Real-World Applications

Conversational AI:

Customer support systems, enterprise chatbots, and digital assistants benefit from deeper reasoning abilities, allowing them to move beyond scripted interactions.

Healthcare:

When given access to structured medical data, ERNIE can support diagnostic assistance, treatment analysis, and personalised patient guidance.

Finance:

Financial analysts and institutions can rely on its reasoning strengths for risk evaluation, market forecasting, and investment strategies.

Legal and Policy Analysis:

The model’s fact-based reasoning makes it valuable for legal research, compliance checks, and government policy planning.

Research and Education:

Academics and scientists can apply its step-by-step problem-solving to mathematics, physics, and complex scientific modelling.

Comparisons with Global Competitors

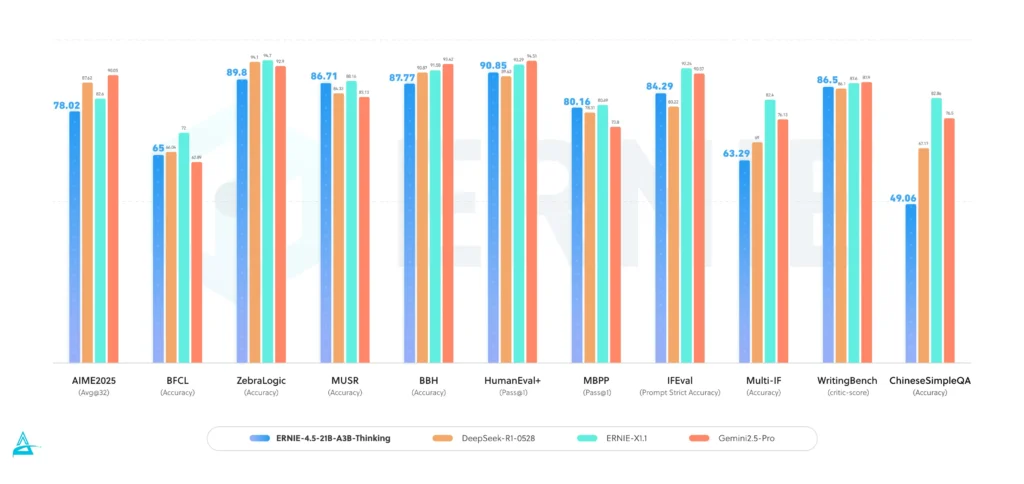

Baidu’s ERNIE-4.5-21B-A3B-Thinking is often measured against OpenAI’s GPT-4, Anthropic’s Claude, Google Gemini, and DeepSeek R2. Each has strengths, but ERNIE takes a unique position:

- GPT-4: Strong in general fluency, but ERNIE offers more efficient reasoning through MoE.

- Claude: Known for safety and alignment, but ERNIE integrates knowledge graphs more effectively.

- Gemini: Excels in speed and multimodal use, while ERNIE doubles down on logical depth.

- DeepSeek R2: Marketed for efficiency, yet ERNIE combines both efficiency and advanced reasoning.

This balance between cost, reasoning ability, and adaptability positions ERNIE as one of the most practical models available.

Looking Ahead: The Future of MoE Reasoning

The success of ERNIE-4.5-21B-A3B-Thinking signals where AI is headed:

- Smarter, not just bigger models.

- Cost-efficient systems that businesses can actually deploy.

- AI that doesn’t just “answer” but reasons and explains.

- Wider adoption across industries where accuracy and reasoning outweigh speed alone.

We are entering a stage where compact MoE models may set the new standard, blending efficiency with intelligence in a way that benefits research, industry, and everyday applications.

Conclusion

The release of Baidu’s ERNIE-4.5-21B-A3B-Thinking shows how AI can evolve beyond sheer scale into precision, intelligence, and usability. With its 21 billion parameters, efficient MoE routing, and built-in reasoning engine, it bridges the gap between massive AI systems and the practical needs of businesses and researchers. This model isn’t just a technical milestone; it’s a clear indication that the future of AI will prioritise smarter reasoning over brute computational power.